Automated classification of pre-treatment QA results of VMAT plans with plan complexity metrics

Michaël Claessens,

Belgium

OC-0116

Abstract

Authors: Michaël Claessens1, Michaël Claessens2, Geert De Kerf3, Verdi Vanreusel1,4,5, Victor Hernandez6, Jordi Saez7, Núria Jornet8, Dirk Verellen1,2

1Iridium Netwerk, Department of Radiation Oncology, Wilrijk, Belgium; 2University of Antwerp, Centre for Oncological Research (CORE), Wilrijk, Belgium; 3Iridium Network, Department of Radiation Oncology, Wilrijk, Belgium; 4University of Antwerp, Centre for Oncological Research, Wilrijk, Belgium; 5SCK CEN, Research in dosimetric applications (RDA), Mol, Belgium; 6Hospital Sant Joan de Reus, Department of Medical Physics, Tarragona, Spain; 7Hospital Clinic de Barcelona, Department of Radiation Oncology, Barcelona, Spain; 8Hospital de la Santa Creu i Sant Pau, Servei de Radiofísica i Radioprotecció, Barcelona, Spain

Purpose or Objective

The objective of this study is to develop a machine learning (ML) classifier that labels VMAT treatment plans as acceptable or non-acceptable. Plan complexity metrics (PCMs) are calculated per arc and used as input features. The predicted output can automatically trigger a reduction in the complexity of the treatment plan before an EPID-based QA measurement is performed.

Material and Methods

A total of 166 VMAT beams that passed or failed the EPID-based pre-treatment QA phase (‘Fraction 0’) were collected from the PerFraction™ (SunCHECK) database. Treatment plans for three anatomical regions (head and neck (H&N), prostate, and lung) were included; all were optimized in Eclipse v13.6, clinically approved, and delivered. Two types of linear accelerator were represented: Clinac and TrueBeam (Varian Medical Systems). For all beams, 14 plan complexity metrics were calculated. A random forest (RF) classifier was then trained to distinguish acceptable from non-acceptable (i.e., failing) beams based on plan complexity. To assess the generalizability of the model, it was tested on three independent datasets collected from different institutions, each consisting of VMAT plans with their associated QA results.

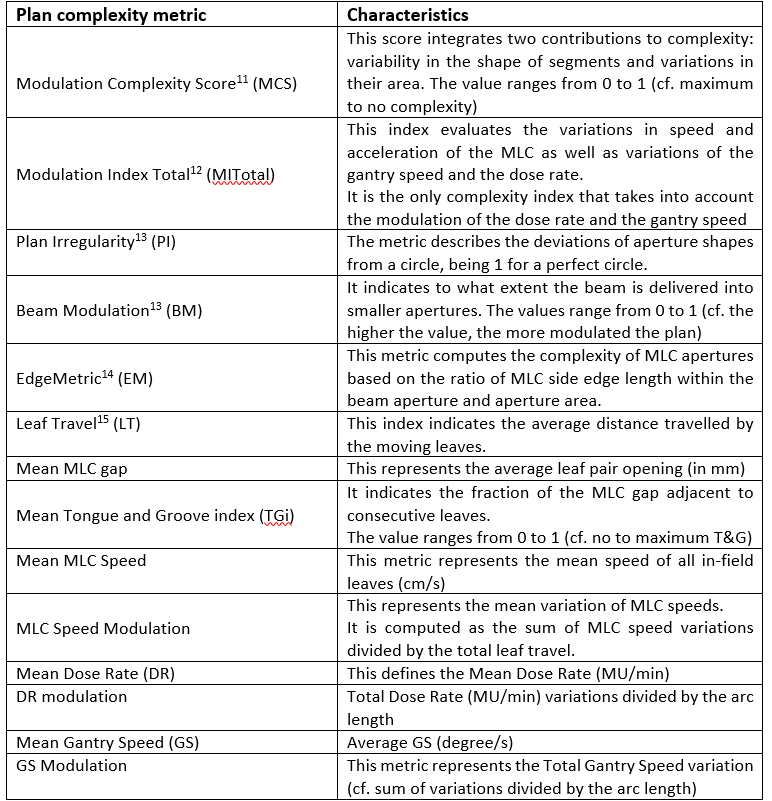

Table 1: Overview of the different plan complexity metrics calculated per beam and used for the creation of the model.
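The training step described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes scikit-learn and NumPy are available, uses randomly generated values in place of the 14 plan complexity metrics, and applies a hypothetical labelling rule, train/test split, and hyperparameters, none of which come from the abstract.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Dataset size and feature count follow the text; the values are synthetic.
N_BEAMS, N_METRICS = 166, 14

# Synthetic stand-in for the per-beam plan complexity metrics (not real data).
X = rng.normal(size=(N_BEAMS, N_METRICS))

# Hypothetical labelling rule: 1 = beam failed QA, 0 = beam passed QA.
noise = rng.normal(scale=0.3, size=N_BEAMS)
y = (X[:, 0] + 0.5 * X[:, 1] + noise > 0.5).astype(int)

# Assumed 70/30 split, stratified so both classes appear in each subset.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Assumed hyperparameters; class_weight="balanced" is one way to handle
# an uneven ratio of passed to failed beams.
clf = RandomForestClassifier(
    n_estimators=200, class_weight="balanced", random_state=0
)
clf.fit(X_train, y_train)

# Rows are true classes, columns are predicted classes, ordered [pass, fail].
cm = confusion_matrix(y_test, clf.predict(X_test), labels=[0, 1])
tn, fp, fn, tp = cm.ravel()

# Impurity-based importances give a rough ranking of the input metrics.
ranking = np.argsort(clf.feature_importances_)[::-1]
print(cm)
print("metric indices, most to least important:", ranking)
```

The importance ranking is only one common way to inspect which metrics drive a random forest; the abstract does not state how the relationship between individual metrics and the QA results was assessed.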

Results

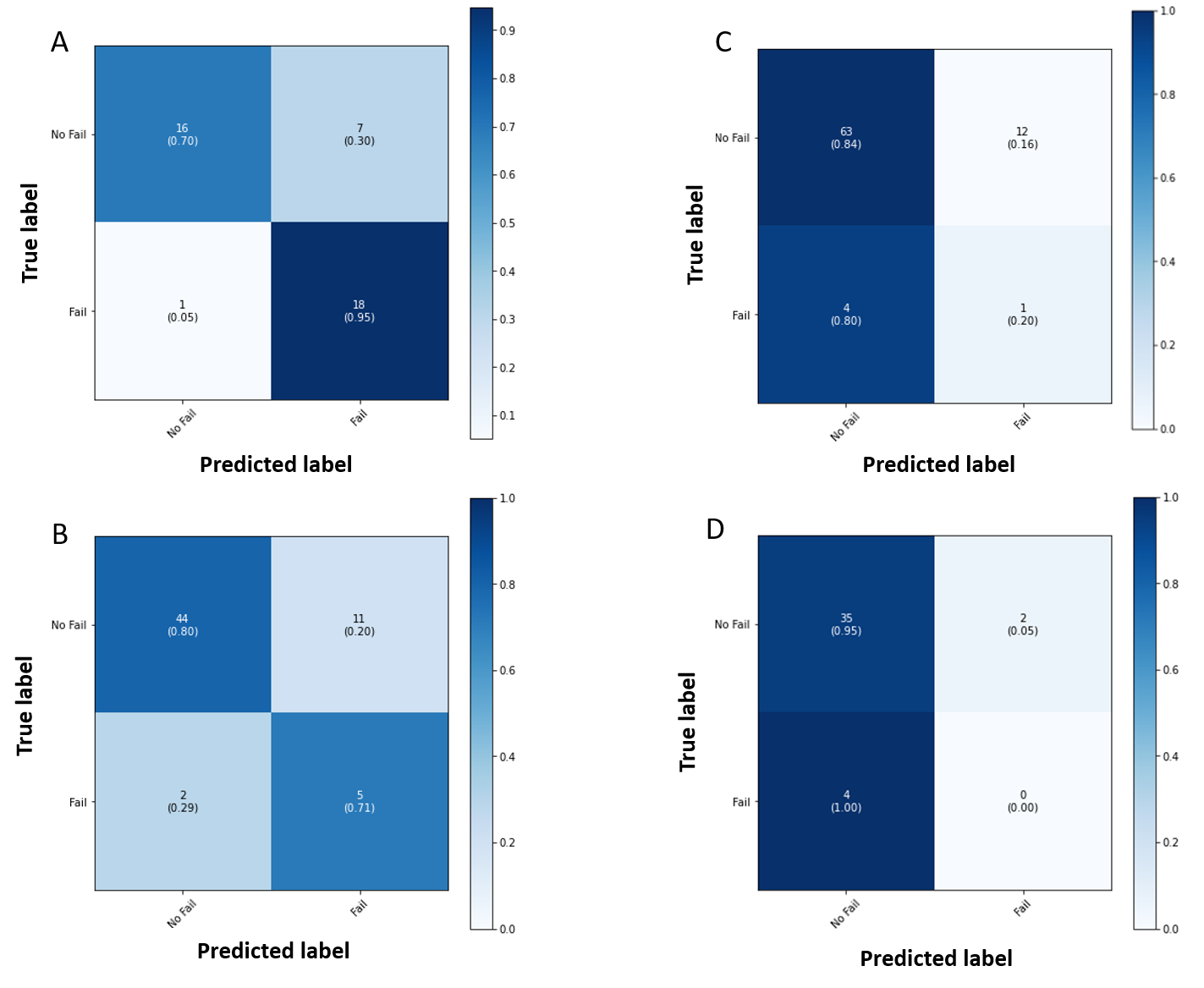

The locally trained RF classifier correctly identified 95% of the beams that failed the local QA, with only 5% false negatives. Mean Dose Rate, Mean Gantry Speed, and Dose Rate Modulation were found to have the highest correlation with the EPID-based institutional QA results. For Institution 1, which uses the same QA platform as the local institute, the model identified 71% of the beams that failed the institution-specific QA procedures, with 29% false negatives. Institutions 2 and 3 used different QA platforms, and both datasets were characterized by a strong imbalance between passed and failed beams according to their institutional QA. For these datasets, the local model could not reproduce the pass/fail distinction determined by the other institutions' QA procedures, as shown by the high number of false negatives.

Figure 1: Confusion matrices for the four test sets: A) Iridium Netwerk, B) Institution 1, C) Institution 2 and D) Institution 3.
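The percentages quoted in the Results are the sensitivity and the false-negative rate for the "failed beam" class, both of which follow directly from a confusion matrix. A minimal sketch is given below; the function name and the counts are hypothetical, chosen for illustration only, and are not the counts shown in Figure 1.

```python
def failure_detection_rates(tp: int, fn: int) -> tuple[float, float]:
    """Return (sensitivity, false-negative rate) for the 'failed beam' class.

    tp: failed beams that the classifier flagged as failing
    fn: failed beams that the classifier labelled as acceptable
    """
    failed = tp + fn
    if failed == 0:
        raise ValueError("no failed beams in the test set")
    return tp / failed, fn / failed


# Hypothetical counts, for illustration only: 19 failures flagged, 1 missed.
sensitivity, fnr = failure_detection_rates(tp=19, fn=1)
print(f"sensitivity = {sensitivity:.0%}, false-negative rate = {fnr:.0%}")
```

Because the two rates share the same denominator, they always sum to one. Both are computed over failed beams only, so they are not distorted by a large surplus of passed beams, which matters for strongly imbalanced test sets.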

Conclusion

An ML model was developed that can correlate PCMs with in-house QA results and classify beams as acceptable or not acceptable based on plan complexity. This approach can help identify cases that require attention, increasing efficiency and streamlining the patient-specific QA (PSQA) process. Based on the current external validation, it is advisable either to develop in-house algorithms that apply institution-specific QA procedures or to build a combined model with data from a different centre that uses the same QA platform. Future work might focus on identifying the parameters required to generalize the model.