The research group of Prof. Marco Riboldi at the Department of Medical Physics, Faculty of Physics of the Ludwig-Maximilians-Universitat Munchen (LMU), offers a PhD position in the framework of the DFG funded project ”Image-guided brachytherapy with real-time navigated needle insertion”. The project is a collaborative research effort among the Department of Medical Physics, LMU Munich (LMU-PH), the Department of Radiation Oncology, LMU University Hospital (LMU-K), and the Department of Radiation Oncology at the

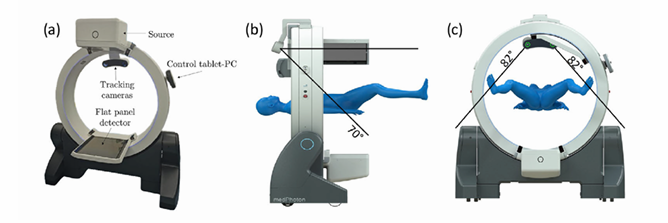

Universitatsklinikum Erlangen (UKER). These institutions share common interests in image-guided brachytherapy, and are equipped with the same robotic CBCT device, which integrates infrared tracking cameras (see Figure 1).

Figure 1: Setup of the robotic camera-CBCT system, which integrates two infrared tracking cameras into the mobile ImagingRing m (a). The cameras provide a tracking field of view of 70° in the vertical direction (b) and 82° in the horizontal direction (c) (adapted from [1]).

Project description & overall framework

Interventional radiation therapy (brachytherapy) suffers from a lack of dedicated and flexible protocols for quantitatively assessing needle insertion accuracy throughout the intervention. Despite recent advances in the use of cone-beam CT (CBCT) imaging for guidance, procedural constraints limit the effective implementation of image-guided procedures. In addition, the optimal trade-off between accurate 3D imaging on the one hand and fast verification of the needle position during the procedure on the other remains largely unexplored. The proposed project therefore focuses on specific developments towards image-guided protocols in brachytherapy featuring real-time navigated needle insertion. We aim to combine infrared navigation technologies with mobile CBCT imaging to develop image-guided protocols, with the twofold aim of providing quantitative verification methods and improving image quality. These protocols are intended for brachytherapy treatments at different anatomical sites, including those where intra-fractional motion degrades image quality. We also aim to use artificial intelligence (AI) methods to enable accurate and reliable needle tracking in X-ray imaging, thus maximizing confidence that brachytherapy treatments are delivered accurately.

The overall scientific goal of the proposed project will be achieved through the following specific objectives, pursued as a collaborative research effort across the three institutions:

1. to exploit integrated infrared tracking technologies for navigated needle insertion;

2. to provide automated tracking of the needle position in X-ray verification images based on AI methods;

3. to optimize imaging verification protocols relying on the best combination of 3D and sparse 2D imaging;

4. to enhance CBCT image quality, especially for treatment sites affected by significant breathing motion.

Achieving these objectives is expected to lead to reliable image verification protocols in brachytherapy that complement real-time guided needle insertion and maximize its accuracy.

PhD activities

In the proposed PhD project, the candidate is expected to contribute to project objectives 2 and 3, building on previous expertise in 2D-3D registration [2, 3], AI-based X-ray tracking [4] and non-circular CBCT trajectories [5]. We will exploit the flexibility in imaging geometry offered by the robotic CBCT scanner to design optimal imaging protocols for guidance and for verifying the needle position. Optimized CBCT images from the project's other work packages will be combined with sparse 2D projections to increase confidence in needle placement, aiming at the optimal trade-off between accuracy and imaging dose.

The PhD project is divided into two research tasks, described in the following.

Research task 1: AI-based needle tracking.

Accurate tracking of the needle tip position is challenging for several reasons. These include imaging artifacts, the small size of the object to be tracked, the object's variable appearance depending on the available viewing angle, and the specific insertion trajectory. AI-based approaches are expected to be more robust to such variations than classical object detection algorithms, and they have been proposed and tested successfully for needle tracking in different imaging modalities [6, 7, 8]. Algorithm performance will be validated on three main datasets: (i) simulated X-ray projections, (ii) experimental acquisitions with needles at known 3D locations and (iii) retrospective clinical data in which an expert radiation oncologist has identified the needles (in cooperation with LMU-K and UKER). The specific research tasks for this activity are as follows:

1. Literature comparison of classical and AI-based object detection frameworks

2. Development of a test phantom featuring a needle holder/insertion guide that can be localized by IR tracking

3. Data collection: (a) data simulation; (b) phantom acquisition (needles at known 3D positions); (c) retrospective clinical data

4. Implementation of the selected object detection framework: (a) data augmentation and network training; (b) testing on external data

5. Accuracy evaluation on simulated and experimental data
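The accuracy evaluation in task 5 could be reported with a few standard error statistics. The Python sketch below shows one way to do this, assuming reference tip positions are available for each test image (for example from simulation or the IR-tracked phantom). It computes the Euclidean tip error per image and converts it to millimeters at the isocenter using an assumed pixel size and geometric magnification. It then reports mean, median, 95th percentile and the fraction of images within a tolerance. The function name `tip_error_summary`, the default pixel size, the magnification and the 1 mm tolerance are illustrative assumptions, not project values.

```python
import numpy as np


def tip_error_summary(pred_px, true_px, pixel_mm=0.5, magnification=1.5, tol_mm=1.0):
    """Summarize 2D needle-tip localization errors over a test set.

    pred_px, true_px: (N, 2) predicted / reference tip positions in detector
    pixels. pixel_mm, magnification and tol_mm are placeholder assumptions.
    """
    pred = np.asarray(pred_px, dtype=float)
    true = np.asarray(true_px, dtype=float)
    err_px = np.linalg.norm(pred - true, axis=1)  # Euclidean error per image
    # Approximate error at the isocenter plane: detector-plane size / magnification.
    err_mm = err_px * pixel_mm / magnification
    return {
        "mean_mm": float(err_mm.mean()),
        "median_mm": float(np.median(err_mm)),
        "p95_mm": float(np.percentile(err_mm, 95)),
        "within_tol": float(np.mean(err_mm <= tol_mm)),  # fraction of images
    }


pred = [[10, 10], [13, 14], [0, 0]]
ref = [[10, 10], [10, 10], [0, 3]]
print(tip_error_summary(pred, ref))
```

Reporting the median and an upper percentile alongside the mean is a common choice, because a few failed detections can dominate the mean.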

Research task 2: 2D-3D image registration.

This research task will integrate the data collected before and after needle placement. To avoid unnecessary imaging dose, we propose using an initial high-quality 3D CBCT image, complemented by sparse X-ray projections, to verify the final needle position in the daily patient anatomy. The 3D CBCT image acquired before implantation serves as a high-quality anatomical reference. It will be registered onto the X-ray projections acquired to verify the final needle position (2D-3D registration). This also requires triangulating the needle position, as determined on the individual projections, and registering it onto the high-quality 3D CBCT image.
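In its simplest form, triangulating the needle tip from its 2D positions on two or more projections is a linear least-squares problem, provided the projection geometry of each view is known. The Python sketch below uses standard direct linear transformation (DLT) triangulation and assumes calibrated 3x4 projection matrices. The helper `make_projection` and its circular geometry (source-to-isocenter distance, source-to-detector distance, pixel size, principal point) are hypothetical stand-ins for the actual robotic CBCT calibration, not the project's method.

```python
import numpy as np


def make_projection(angle_deg, sid=1000.0, sdd=1500.0, pixel=0.5, center=(512.0, 512.0)):
    """Hypothetical 3x4 projection matrix for a source rotating about the y axis.

    sid: source-to-isocenter distance (mm), sdd: source-to-detector distance (mm),
    pixel: detector pixel size (mm), center: principal point (pixels).
    """
    a = np.deg2rad(angle_deg)
    R = np.array([[np.cos(a), 0.0, -np.sin(a)],
                  [0.0, 1.0, 0.0],
                  [np.sin(a), 0.0, np.cos(a)]])
    t = np.array([0.0, 0.0, sid])  # isocenter lies sid in front of the source
    f = sdd / pixel  # focal length expressed in pixels
    K = np.array([[f, 0.0, center[0]],
                  [0.0, f, center[1]],
                  [0.0, 0.0, 1.0]])
    return K @ np.hstack([R, t[:, None]])


def project(P, X):
    """Project a 3D point (mm) to detector coordinates (pixels)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(projection_matrices, points_2d):
    """Linear (DLT) triangulation of one 3D point from two or more views."""
    if len(projection_matrices) < 2:
        raise ValueError("at least two views are required")
    rows = []
    for P, (u, v) in zip(projection_matrices, points_2d):
        P = np.asarray(P, dtype=float)
        # From x ~ P X: u * (p3 . X) - p1 . X = 0 and v * (p3 . X) - p2 . X = 0
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.vstack(rows)
    # Homogeneous least-squares solution: right singular vector belonging
    # to the smallest singular value of A.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


views = [make_projection(0.0), make_projection(90.0)]
tip = np.array([12.0, -5.0, 30.0])  # hypothetical needle tip position (mm)
detections = [project(P, tip) for P in views]
print(triangulate_dlt(views, detections))
```

With noise-free detections the tip is recovered up to rounding. With real detections, errors in the 2D positions and in the calibration propagate to the 3D estimate. Views at well-separated angles generally give a better-conditioned system than nearly parallel ones.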

The 2D-3D registration must properly account for possible changes in patient position between acquisition of the 3D CBCT and the verification X-ray projections, assuming no significant intra-fractional changes. To accomplish this task, an existing 2D-3D registration framework will be used and compared with competing AI-based approaches [9]. The implemented algorithms will be tested on retrospective data and on simulations using a digital anthropomorphic phantom [10]. The specific research tasks are as follows:

1. Triangulation of the needle tip position based on the extracted 2D locations

2. Adaptation of the existing 2D-3D framework

3. Retrospective data collection: (a) CT/CBCT images; (b) CBCT projections

4. Data simulation: (a) anthropomorphic digital model/high-resolution CT; (b) simulation of ground-truth 2D projections

5. Comparison with deep-learning alternatives

6. Implementation of a final demonstrator for 2D-3D data fusion
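A deliberately simplified toy example can illustrate the principle behind intensity-based 2D-3D registration. The method simulates projections (DRRs) of the reference volume under candidate rigid shifts and keeps the shift whose DRRs best match the measured projections. The Python sketch below is not the existing framework mentioned above. It assumes parallel-beam line integrals instead of cone-beam geometry, integer translations only (no rotations), circular shifts via `np.roll`, normalized cross-correlation as the similarity measure, and exhaustive search. All function names are hypothetical.

```python
import numpy as np


def toy_drr(volume, shift, axis):
    """Toy DRR: translate the volume by an integer voxel shift (circularly),
    then sum along one axis. A parallel-beam line integral, NOT a cone-beam projector."""
    moved = np.roll(volume, shift=shift, axis=(0, 1, 2))
    return moved.sum(axis=axis)


def ncc(a, b):
    """Normalized cross-correlation between two images of equal shape."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def register_translation(volume, projections, search=3):
    """Exhaustive search over integer 3D shifts maximizing the NCC summed over views.

    projections: dict mapping a beam axis (0, 1 or 2) to the measured 2D image
    acquired along that axis.
    """
    best_shift, best_score = None, -np.inf
    steps = range(-search, search + 1)
    for dz in steps:
        for dy in steps:
            for dx in steps:
                shift = (dz, dy, dx)
                score = sum(ncc(toy_drr(volume, shift, ax), img)
                            for ax, img in projections.items())
                if score > best_score:
                    best_shift, best_score = shift, score
    return best_shift, best_score


# Synthetic check: a random "CBCT" volume moved by a known patient shift,
# observed through two verification projections along axes 0 and 1.
rng = np.random.default_rng(0)
cbct = rng.random((24, 24, 24))
true_shift = (2, -1, 3)
verification = {ax: toy_drr(cbct, true_shift, ax) for ax in (0, 1)}
print(register_translation(cbct, verification))
```

In this toy setting, a projection along one axis is insensitive to shifts along that same axis, which is why two views are combined. A realistic implementation would instead use cone-beam ray casting, six rigid degrees of freedom and a continuous optimizer.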

Position description and working place

The research project offers a broad spectrum of tasks, including the further development of existing algorithms and the realization of physical phantoms. The salary will be 75% TV-L E13 for a duration of 36 months. The workplace will be at the Forschungszentrum Garching, which is well connected by public transportation to the city of Munich, including a direct connection to the LMU University Hospital (LMU-K). The successful candidate will join a highly motivated, well-established team within a multidisciplinary, international network, in a stimulating scientific environment with a long tradition of collaboration and research excellence. Candidates with disabilities will be given preference in case of equal qualification. Applications from women are strongly encouraged. The candidate will work under the academic guidance of Professor Marco Riboldi.

Requirements & Qualifications

• MSc in Physics, experience in Medical Physics preferred

• Strong academic record

• Medical imaging and/or image processing background

• Fluent English (spoken and written), documented by a language certificate (e.g. IELTS, TOEFL)

• Basic programming skills in Python, MATLAB or C++

• Technical proficiency, scientific creativity, team working skills

• Solid experimental skills

Application

If you are interested, please send your electronic application (curriculum vitae, motivation letter, transcript of records, and contact details of, or letters from, two referees) by 30/09/2025 to the following address:

Prof. Dr. Marco Riboldi

marco.riboldi@physik.uni-muenchen.de

References

[1] A Karius et al. “First implementation of an innovative infra-red camera system integrated into a mobile CBCT scanner for applicator tracking in brachytherapy-Initial performance characterization”. In: J Appl Clin Med Phys (2024), e14364. doi: 10.1002/acm2.14364.

[2] Palaniappan Prasannakumar et al. “X-ray CT adaptation based on a 2D–3D deformable image registration framework using simulated in-room proton radiographies”. In: Physics in Medicine and Biology 67.4 (2022), p. 045003. doi: 10.1088/1361-6560/ac4ed9.

[3] Palaniappan Prasannakumar et al. “Multi-stage image registration based on list-mode proton radiographies for small animal proton irradiation: A simulation study”. In: Zeitschrift für medizinische Physik 34.4 (2024), pp. 521–532. doi: 10.1016/j.zemedi.2023.04.003.

[4] L Huang et al. “Simultaneous object detection and segmentation for patient-specific markerless lung tumor tracking in simulated radiographs with deep learning”. In: Med Phys (2024), pp. 1957–1973. doi: 10.1002/mp.16705.

[5] C Wei et al. “Reduction of cone-beam CT artifacts in a robotic CBCT device using saddle trajectories with integrated infrared tracking”. In: Med Phys (2024), pp. 1674–1686. doi: 10.1002/mp.16943.

[6] C Mwikirize, JL Nosher, and I Hacihaliloglu. “Convolution neural networks for real-time needle detection and localization in 2D ultrasound”. In: Int J Comput Assist Radiol Surg (2018), pp. 647–657. doi: 10.1007/s11548-018-1721-y.

[7] A Mehrtash et al. “Automatic Needle Segmentation and Localization in MRI With 3-D Convolutional Neural Networks: Application to MRI-Targeted Prostate Biopsy”. In: IEEE Trans Med Imaging (2019), pp. 1026–1036. doi: 10.1109/TMI.2018.2876796.

[8] S Mukhopadhyay et al. “Deep learning based needle tracking in prostate fusion biopsy”. In: Proc SPIE (2021), pp. 605–613. doi: 10.1117/12.2580891.

[9] Jia Gong et al. “DiffPose: Toward More Reliable 3D Pose Estimation”. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2023), pp. 13041–13051.

[10] WP Segars et al. “4D XCAT phantom for multimodality imaging research”. In: Med Phys (2010), pp. 4902–4915. doi: 10.1118/1.3480985.