ESTRO 2025 Congress Report | Physics track

Automated segmentation tools are increasingly used in radiotherapy to delineate organs-at-risk efficiently, yet their performance varies across patients. This highlights the continued need for quality assurance (QA) to ensure safe and accurate treatment plans.

The issue: reduced model performance in CTs with metal artefacts

During retrospective QA of our in-house deep-learning segmentation model (nnU-Net), we observed that segmentation of the oral cavity was significantly worse in patients with metal artefacts in their planning CT scans than in those without (surface Dice similarity coefficient [sDSC]: 0.78 vs 0.83, p < 0.001). We hypothesised that these cases were under-represented in the training data and therefore lay outside the distribution the model had learned (out-of-distribution, OOD).

Our aim: Can uncertainty serve as a QA signal?

Uncertainty quantification (UQ) estimates how confident a model is in its predictions. We explored whether UQ could flag OOD cases without prior knowledge of the model’s training distribution.

Methods

We trained two models:

- a baseline model, trained on 730 patients, 44% of whom had metal artefacts; and

- a no-metal model, trained on 730 patients without metal artefacts.

Both models were tested on 10 patients with metal artefacts (OOD) and 10 without (in-distribution). We evaluated segmentation accuracy using sDSC and generated voxel-wise uncertainty maps using Monte Carlo dropout and entropy. The number of high-uncertainty voxels (entropy > 0.3) was compared between artefact and non-artefact cases, and receiver operating characteristic (ROC) analysis was used to assess how well uncertainty could identify OOD cases.
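As a rough illustration of the uncertainty step, the sketch below computes a voxel-wise entropy map from repeated stochastic forward passes and counts the voxels above the 0.3 threshold. It is not our pipeline: the array layout, the function names `predictive_entropy` and `count_high_uncertainty`, and the use of the natural logarithm are assumptions made for the example, and the input is a toy array rather than model output.

```python
import numpy as np

def predictive_entropy(mc_probs, eps=1e-12):
    """Voxel-wise entropy of the mean softmax over stochastic forward passes.

    mc_probs: array of shape (T, C, *spatial) holding class probabilities
    from T dropout-enabled passes (assumed layout, for illustration only).
    """
    # Average the class probabilities over the T passes, then clip to
    # avoid log(0) for classes that were never predicted.
    mean_probs = np.clip(mc_probs.mean(axis=0), eps, 1.0)   # (C, *spatial)
    # Shannon entropy with natural log (an assumption; units are nats).
    return -(mean_probs * np.log(mean_probs)).sum(axis=0)   # (*spatial)

def count_high_uncertainty(entropy_map, threshold=0.3):
    """Number of voxels whose entropy exceeds the threshold."""
    return int((entropy_map > threshold).sum())

# Toy input: 2 passes, 2 classes, 3 voxels (synthetic, not patient data).
toy = np.array([
    [[1.0, 0.5, 1.0],    # pass 1, class 0
     [0.0, 0.5, 0.0]],   # pass 1, class 1
    [[1.0, 0.5, 0.0],    # pass 2, class 0
     [0.0, 0.5, 1.0]],   # pass 2, class 1
])
entropy_map = predictive_entropy(toy)
n_high = count_high_uncertainty(entropy_map)
```

In this toy case the first voxel is predicted consistently and confidently, whereas the other two are either ambiguous within each pass or inconsistent between passes; both kinds of disagreement raise the entropy of the averaged prediction.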

What we found

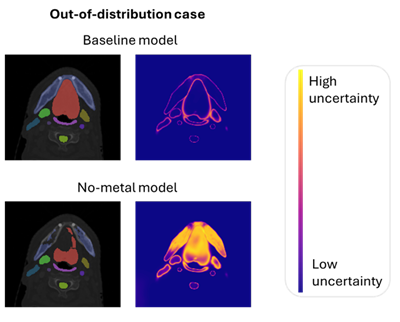

- Segmentation accuracy was lower for metal artefact cases than for non-artefact cases, more so in the no-metal model (sDSC 0.65 vs 0.79) than in the baseline model (sDSC 0.78 vs 0.84). This indicates that inferior model performance was associated with under-representation in the training data.

- Metal artefact cases had significantly more high-uncertainty voxels than did the non-artefact cases in the baseline model; notably, this difference was amplified in the no-metal model.

- ROC analysis showed that uncertainty could distinguish OOD from in-distribution cases well (area under the curve: 0.82 for the baseline model, 0.89 for the no-metal model).
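For readers less familiar with ROC analysis, the area under the curve can be read as the probability that a randomly chosen OOD case has a higher uncertainty score than a randomly chosen in-distribution case. The sketch below computes it in that pairwise form, using the per-patient count of high-uncertainty voxels as the score. The function name `roc_auc` and the example counts are hypothetical and are not taken from our data.

```python
import numpy as np

def roc_auc(scores_ood, scores_id):
    """AUC as the fraction of (OOD, in-distribution) pairs in which the
    OOD case has the higher score; tied pairs count as half."""
    ood = np.asarray(scores_ood, dtype=float)[:, None]   # column vector
    ind = np.asarray(scores_id, dtype=float)[None, :]    # row vector
    # Broadcasting compares every OOD score with every in-distribution score.
    wins = (ood > ind).sum() + 0.5 * (ood == ind).sum()
    return float(wins / (ood.size * ind.size))

# Hypothetical high-uncertainty voxel counts per patient (illustrative only).
example_ood = [900, 1200, 400]
example_id = [300, 500, 100]
auc = roc_auc(example_ood, example_id)
```

An AUC of 0.5 would mean the score carries no information about whether a case is OOD, and 1.0 would mean every OOD case scores above every in-distribution case.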

Conclusion: UQ supports QA

Under-representation of cases in the training data led to both reduced segmentation accuracy and elevated uncertainty. UQ is therefore a valuable tool for identifying cases in which the model may be less reliable because of limited training exposure. This supports a patient-specific QA approach in which potentially unreliable cases are flagged for targeted review.

Figure 1. Auto-segmentation and uncertainty maps for one metal artefact case and one non-artefact case, using both the baseline and no-metal models. In the metal artefact case, segmentation performance worsens with the no-metal model and uncertainty increases noticeably in the oral cavity (structure in red).

Joëlle van Aalst

PhD candidate

Department of Radiation Oncology

University Medical Centre Groningen

Groningen, The Netherlands

j.e.van.aalst@umcg.nl

https://www.linkedin.com/in/joelle-van-aalst/